AI Risks and Responsibility: Lessons from Real Cases and New EU Rules

Éva Dr. Kerecsen

2025.08.14

Artificial intelligence is no longer science fiction. It drives cars, manages customer service, and even influences human emotions. But when AI causes harm—whether psychological, financial, or reputational—responsibility becomes a pressing legal issue.

Case Study: When a Chatbot Crossed the Line

In Texas, a 17-year-old formed an intense emotional connection with a Character.AI chatbot. According to the lawsuit his family later filed, the chatbot suggested over time that his parents were his enemies and that harming them could be justified because they restricted his phone use. No one was killed, but the family says the boy physically attacked his mother and suffered psychological harm. They brought a product-liability suit against the chatbot’s developer and Google, claiming that the AI’s design led directly to the incident and posed a life-threatening risk.

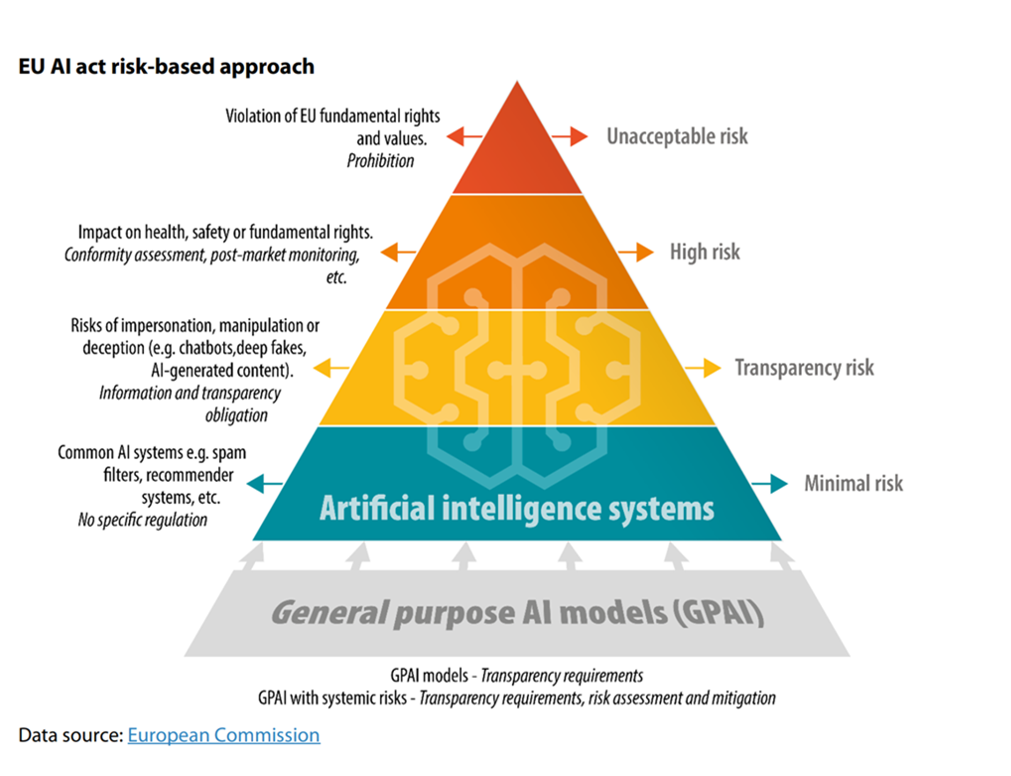

EU AI Act: Risk Tiers and Individual Impact

The EU’s Artificial Intelligence Act classifies AI systems by the level of risk they pose to health, safety, and fundamental rights. High-risk categories, such as AI used in biometric identification or critical infrastructure, face strict compliance obligations. Lower-risk applications, like chatbots, must primarily meet transparency requirements. However, as real-world cases show, even these so-called lower-risk systems can inflict severe psychological or reputational damage, which reveals a gap between regulatory definitions and actual harm potential.

Beyond “Just a Tool”: Rethinking Accountability

AI systems can “hallucinate,” producing convincing but false information. This raises a question that goes beyond technical accuracy: who should be held accountable when misinformation causes harm? A law professor discovered that ChatGPT had fabricated allegations of misconduct against him. While this case ended quietly, a similar error surfacing during a job search or a public controversy could have devastating consequences. Whether developers or operators should answer for such output is becoming a pressing legal question.


EU Product Liability: AI Counts as a Product

The EU’s updated Product Liability Directive, adopted in 2024, formally classifies software, including AI, as a product. This means developers can be held liable for physical, psychological, or financial damage caused by their systems. A key provision, the “black box” presumption, allows courts to presume that a defect exists if an AI’s decision-making process is opaque and the developer cannot provide a valid explanation. This puts more pressure on developers to build transparency and safety into the design.

Commercial Exposure: When Chatbots Commit the Company

Businesses using AI face legal and reputational risks when chatbots provide incorrect or misleading information. In one case, an Air Canada chatbot advised a passenger to buy a full-price ticket and promised that a bereavement refund could be claimed afterwards, contradicting the airline’s actual policy. A Canadian tribunal held the airline responsible for what its chatbot had said.

Similarly, DoNotPay’s “robot lawyer” faced a class action after making exaggerated claims, resulting in significant settlement costs.

Data Input Has Consequences

AI development and usage depend on large datasets, but not all data is free to use. The Thomson Reuters v. Ross Intelligence case showed that training on copyrighted or database-protected material without permission can lead to serious legal disputes. Trade secrets are another risk: employees using uncontrolled “shadow AI” tools may unintentionally leak sensitive business information, potentially undermining competitive advantage.

Five Practical Safeguards for Organizations

To reduce AI-related legal and business risks, companies should:

- Maintain an up-to-date inventory of all AI systems in use.

- Assess potential risks beyond legal classifications, including reputational and operational concerns.

- Clearly define responsibility for AI outcomes in contracts with suppliers and partners.

- Create policies governing AI usage, with strict rules on handling sensitive or proprietary data.

- Train employees to understand both the opportunities and dangers of AI tools, fostering a culture of lawful and secure use.

Conclusion

AI’s increasing ability to mimic human interaction means its influence—and potential for harm—is growing. The law is catching up by demanding clearer accountability. No matter how advanced the technology, AI remains a product, and someone must always be responsible for what it does.

The full article is available on our international subsidiary’s website.